As CTO of The Rumie Initiative, I spend a decent amount of time trying to find tech solutions that offer “the most for the least” in terms of computing power vs. cost.

There is one thing that has baffled me in my long career in technology.

Hardware capability increases exponentially, yet software somehow just bloats to consume the extra power and space without providing much more functionality or value.

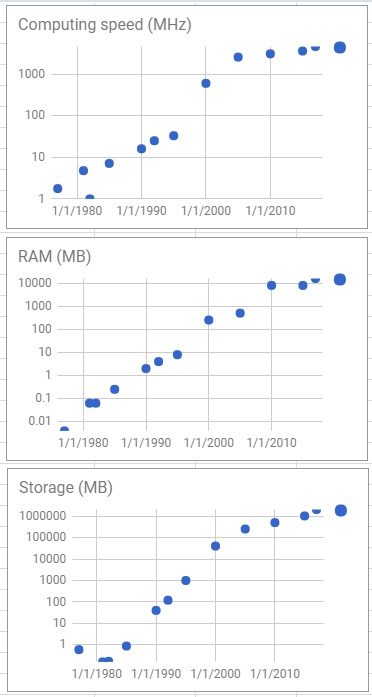

Computer specs from the past 30 years

As you can see, we’ve enjoyed exponential growth in computing power and storage.

And somehow our software is still clunky and slow.

The Blogger tab I have open to write this post is currently using over 500MB of RAM in Chrome.

How is that even possible?

I’ve read many complaints about this over the years. Usually the blame is put on “lazy developers” – a group of which I proudly call myself a member :-)

You can now buy a powerful general-purpose computer with 512MB of RAM and a 1GHz processor (considered high-end in the mid-2000s) for five bucks.

So why am I writing this on a PC with 16GB of RAM?*

I think there might be some opportunities in the software industry to move “backwards” a bit, and look at ways we can more effectively use all of this amazingly cheap processing capability.

There have been a few attempts at a Software Minimalism movement, but they seemingly haven’t gained much traction.

So what are the underlying problems?

What are some potential solutions?

Are there projects in this space that I’m not aware of?

Or is it just inevitable that we’ll keep writing software to fill up the ever-expanding space those amazing hardware engineers keep coming up with?

P.S. My Blogger tab is now using 750MB of RAM. Is that really reasonable?

*To be fair, the RAM is needed for the JetBrains IDEs I frequently use. But I remember Microsoft Visual Studio back in the late 90s, with similar capabilities, running on machines with 16MB of RAM, roughly 1/1000th of what we have now. (reference)